What Is Face Analysis?

Face analysis detects faces in an image or video and can help determine characteristics of the face, such as the gender, emotion, and age of the person in the image. It should not be confused with face recognition, which involves identifying which person is seen in an image.

Here are a few techniques commonly used in face analysis:

1. Identifying features in a human face that distinguish male and female faces, to facilitate gender detection.

2. Identifying markers such as pupil position, eyebrows, and lip borders, which change with age, to facilitate age detection.

3. Using sentiment estimation to detect facial expressions and determine the probability of emotions like happiness, sadness, surprise, or anger.

Main Tasks Handled by Face Analysis Algorithms

Facial Landmarks

Facial landmarks are areas of interest in a human face, also known as keypoints, including:

1. Eyebrows

2. Eyes

3. Nose

4. Mouth

5. Jaw lines

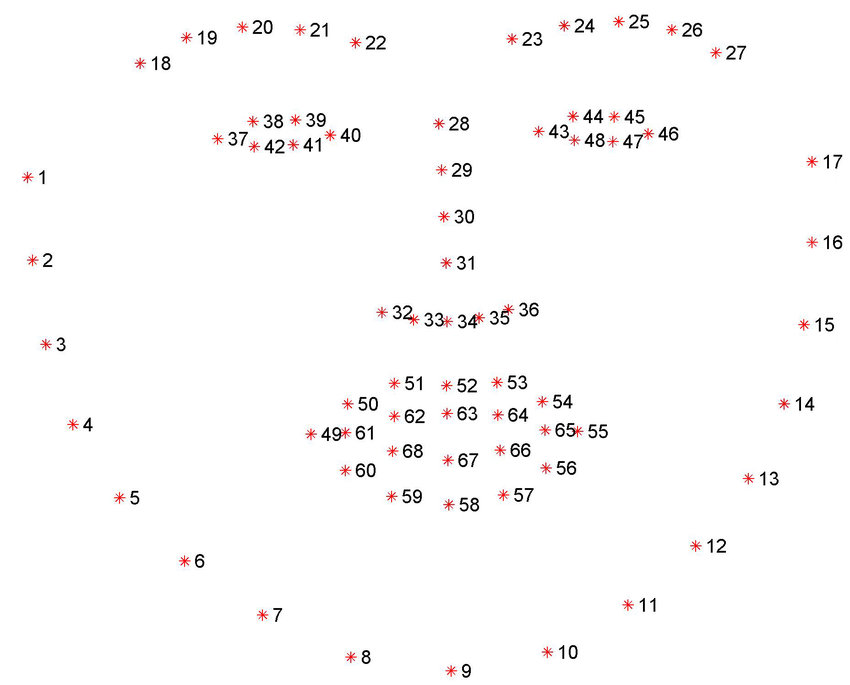

Researchers have identified as many as 68 facial landmarks. The following illustration shows the location of these landmarks.

Source: ResearchGate

Facial landmark detection is the task of detecting and tracking key facial landmarks, along with the changes in their positions caused by head movements and facial expressions.

Some applications of facial landmark detection include face swapping, head pose detection, facial gesture detection, and gaze estimation.
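
As a rough illustration of how landmark output is typically consumed, the sketch below groups a list of 68 landmark points into facial regions and measures the distance between the two eye centers. The index ranges follow the commonly used 68-point annotation convention (the one used, for example, by dlib's shape predictor). The function names and the synthetic points are assumptions made for this example and do not come from any particular library.

```python
import math

# Index ranges (0-based, end-exclusive) of the common 68-point layout.
LANDMARK_REGIONS = {
    "jaw": (0, 17),
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "nose": (27, 36),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "mouth": (48, 68),
}


def split_regions(points):
    """Group a list of 68 (x, y) landmarks by facial region."""
    if len(points) != 68:
        raise ValueError("expected 68 landmarks, got %d" % len(points))
    return {name: points[a:b] for name, (a, b) in LANDMARK_REGIONS.items()}


def centroid(points):
    """Return the mean (x, y) position of a group of points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def interocular_distance(points):
    """Distance between the two eye centers, often used to normalize scale."""
    regions = split_regions(points)
    x1, y1 = centroid(regions["right_eye"])
    x2, y2 = centroid(regions["left_eye"])
    return math.hypot(x2 - x1, y2 - y1)


# Synthetic landmarks on a straight line, only to exercise the functions.
points = [(float(i), 0.0) for i in range(68)]
regions = split_regions(points)
print({name: len(pts) for name, pts in regions.items()})
print(interocular_distance(points))
```

In a real system the `points` list would come from a trained landmark detector rather than being generated by hand.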

Visual Sentiment Analysis

Visual sentiment analysis is a new field in artificial intelligence in which computers analyze and understand facial expressions, gestures, intonation, and other non-verbal forms of human expression, to determine a person’s emotional state.

Visual sentiment analysis relies on computer vision technology, with a particular focus on face analysis, to analyze the shape of faces in images and videos and derive the emotional state of an individual. Current models are based on convolutional neural networks (CNNs) or support vector machines (SVMs).

These models typically operate in four steps to perform visual sentiment analysis based on an image of a person:

1. Faces are detected in the image or video frame using state-of-the-art face detection techniques.

2. When a face is detected, the image data is preprocessed before being sent to the emotion classifier. Image preprocessing involves normalizing images, reducing noise, smoothing, optimizing image rotation, resizing, cropping, and adapting to lighting and other image conditions.

3. Processed face images are provided to the emotion classifier, which might use features such as facial action units (AUs), facial landmark motions, distances between facial landmarks, gradation features, and facial textures.

4. Based on these features, the classifier assigns an emotion category such as “sad”, “happy” or “neutral” to the face in the image.
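
The four steps above can be sketched as a small pipeline. This is a toy illustration only: the face detector is a placeholder that treats the whole image as one face, the two brightness features stand in for real features such as action units or landmark distances, and the classifier weights are made up. All function names are hypothetical.

```python
import math

EMOTIONS = ["sad", "happy", "neutral"]

# Made-up weights, one row per emotion. A real classifier would be trained.
WEIGHTS = [[1.0, -1.0], [-1.0, 1.0], [0.0, 0.0]]


def detect_faces(image):
    """Step 1 (placeholder): return boxes as (top, left, height, width).

    A real system would call a trained face detector here.
    """
    return [(0, 0, len(image), len(image[0]))]


def preprocess(image, box, size=4):
    """Step 2: crop the box, resize by nearest neighbor, scale to [0, 1]."""
    top, left, height, width = box
    face = []
    for r in range(size):
        src_r = top + r * height // size
        row = []
        for c in range(size):
            src_c = left + c * width // size
            row.append(image[src_r][src_c] / 255.0)
        face.append(row)
    return face


def extract_features(face):
    """Step 3 (toy features): mean brightness of the upper and lower halves."""
    half = len(face) // 2

    def mean(rows):
        values = [v for row in rows for v in row]
        return sum(values) / len(values)

    return [mean(face[:half]), mean(face[half:])]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features):
    """Step 4: pick the emotion category with the highest probability."""
    scores = [sum(w * f for w, f in zip(row, features)) for row in WEIGHTS]
    probs = softmax(scores)
    best = max(range(len(EMOTIONS)), key=lambda i: probs[i])
    return EMOTIONS[best], dict(zip(EMOTIONS, probs))


def analyze(image):
    """Run all four steps on a grayscale image given as a list of rows."""
    results = []
    for box in detect_faces(image):
        face = preprocess(image, box)
        label, probs = classify(extract_features(face))
        results.append((box, label, probs))
    return results


# Synthetic 8x8 grayscale image: dark upper half, bright lower half.
image = [[0] * 8 for _ in range(4)] + [[255] * 8 for _ in range(4)]
results = analyze(image)
print(results[0][1], results[0][2])
```

The point of the sketch is the separation of stages: each step can be replaced by a stronger component (for example, a CNN-based classifier) without changing the others.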

Age Estimation

Age estimation processes enable machines to determine a person’s age according to biometric features, such as human faces.

Automatic facial age estimation employs dedicated algorithms to determine age according to features derived from face images. Face image interpretation involves several processes, including face detection, feature vector formulation, classification, and the location of facial characteristics.

Age estimation systems can create the following types of output:

1. An estimate of the person’s exact age.

2. The person’s age group.

3. A binary result that indicates whether the person falls within a certain age range (for example, above or below a given age).

Age-group classification is commonly used because many scenarios require only a rough estimate of the subject’s age. However, a system trained mainly on a specific age range may not be able to estimate ages outside that range reliably.
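
The three output types can all be derived from a single numeric estimate, as the short sketch below shows. The group boundaries, the labels, and the threshold of 18 are assumptions chosen for illustration; a real system would define them according to its use case, and many systems predict the age group directly instead of deriving it from a number.

```python
from bisect import bisect_right

# Hypothetical group boundaries: each value is the first age of the next group.
GROUP_UPPER_BOUNDS = [13, 20, 65]
GROUP_LABELS = ["child", "teenager", "adult", "senior"]


def age_group(age):
    """Map a numeric age estimate to a coarse age group."""
    if age < 0:
        raise ValueError("age must be non-negative")
    return GROUP_LABELS[bisect_right(GROUP_UPPER_BOUNDS, age)]


def describe(estimated_age, threshold=18):
    """Return the three output types for one age estimate."""
    return {
        "exact_age": round(estimated_age),
        "age_group": age_group(estimated_age),
        "is_over_threshold": estimated_age >= threshold,
    }


print(describe(34.6))
print(describe(10.2))
```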

Age estimation challenges

Age estimation encounters similar problems to other face image interpretations, such as face recognition, face detection, and gender recognition. Automatic age estimation is negatively affected by facial appearance deformations caused by factors such as:

1. Inter-person variation

2. Different expressions

3. Lighting variation

4. Occlusions

5. Face orientation

In addition to these face image interpretation challenges, age estimation is also affected by the following challenges:

1. Limited inter-age group variation—occurs when appearance differences between adjacent age groups are negligible.

2. Diversity of aging variation—occurs when the type of age-related effects and the aging rate differ between individuals.

3. Dependence on external factors—occurs when external factors, such as health conditions, psychology, and lifestyle, influence the aging rate pattern adopted by an individual.

4. Data availability—occurs when there are no suitable datasets for training and testing. A suitable dataset for age estimation must contain multiple images displaying the same subject at different ages.

Face Analysis vs. Face Recognition

Face analysis and face recognition are two activities that usually occur after face detection. Face detection is a computer vision task in which an algorithm attempts to identify faces in an image. Face detection algorithms provide the bounding boxes of the faces they manage to detect.

When a face is detected, a computer vision system might perform:

1. Face recognition: identifying who the person shown in the image is, or verifying a known identity. Applications of face recognition include biometric identification and automated tagging of people in social media images.

2. Facial analysis: extracting information from the face image, such as demographics, emotions, engagement, age, and gender. Applications of facial analysis include age detection, gender detection, and sentiment analysis.

Face Analysis Use Cases

Face Verification

Face verification is a security mechanism that enables digital verification of identity. Traditional verification involved showing an identification document (ID) to a security guard or seller. This type of verification is not possible online.

Governments and businesses providing digital services require verification. Common verification includes knowledge-based security like passwords, device-based security like tokens and mobile phones, and biometrics like fingerprints and irises. However, these mechanisms are not entirely secure.

Threat actors can steal and guess passwords or gain unauthorized access to lost or stolen devices. Additionally, not all biometric identifiers are included in IDs, passports, and driver’s licenses. Photos that include the person’s face, on the other hand, are included in most IDs.

Face verification enables customers to prove that they are the holders of their ID and assert that they are truly present during the facial scan. Unlike images that can be stolen, face verification provides live proof of the person’s presence and identity.
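
The article does not describe how the comparison between the ID photo and the live scan is made. One common approach, shown here as an assumption rather than as the method of any specific product, is to map each face image to an embedding vector and accept the match when the vectors are similar enough and a separate liveness check has passed. The embeddings, the threshold of 0.8, and the function names below are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def verify(id_embedding, live_embedding, liveness_passed, threshold=0.8):
    """Accept only if the person is present and both faces match."""
    if not liveness_passed:
        return False
    return cosine_similarity(id_embedding, live_embedding) >= threshold


# Made-up embeddings standing in for the output of a face embedding model.
id_photo = [0.10, 0.90, 0.20]
same_person = [0.12, 0.88, 0.25]
other_person = [0.90, 0.10, 0.00]

print(verify(id_photo, same_person, liveness_passed=True))
print(verify(id_photo, other_person, liveness_passed=True))
print(verify(id_photo, same_person, liveness_passed=False))
```

Keeping the liveness result as a separate input reflects the idea in the text that a matching image alone is not enough, because images can be stolen.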

In-Cabin Automotive

There is a growing need for computer vision systems that can monitor individuals in a car while driving. The objective of these systems is to identify when a driver falls asleep, is not focused on the road, or makes other errors while driving, and to alert them to prevent accidents.

Face analysis applications for in-cabin automotive environments perform tasks like keypoint estimation, gaze analysis, and hand pose analysis, to accurately analyze the in-cabin environment.
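
One widely used heuristic for spotting closed eyes from keypoints is the eye aspect ratio (EAR), which compares the height of the eye to its width using six eye landmarks. The article does not name this technique; it is used here only as an illustrative example, and the threshold of 0.2 and the three-frame window are assumed values that would need tuning on real data.

```python
import math


def eye_aspect_ratio(eye):
    """EAR for six (x, y) eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    and p6 and p5 are the matching points on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = (math.hypot(p2[0] - p6[0], p2[1] - p6[1])
                + math.hypot(p3[0] - p5[0], p3[1] - p5[1]))
    horizontal = math.hypot(p1[0] - p4[0], p1[1] - p4[1])
    return vertical / (2.0 * horizontal)


def alert_frames(ear_values, threshold=0.2, min_consecutive=3):
    """Return frame indexes at which a drowsiness alert would fire.

    An alert fires once when the EAR has stayed below the threshold
    for `min_consecutive` frames in a row.
    """
    alerts = []
    run = 0
    for index, ear in enumerate(ear_values):
        run = run + 1 if ear < threshold else 0
        if run == min_consecutive:
            alerts.append(index)
    return alerts


# Synthetic eye shapes: one wide open, one nearly closed.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
ear_open = eye_aspect_ratio(open_eye)
ear_closed = eye_aspect_ratio(closed_eye)

# Per-frame EAR values for a short synthetic clip.
alerts = alert_frames([0.3, 0.1, 0.1, 0.1, 0.1, 0.3, 0.1])
print(ear_open, ear_closed, alerts)
```

Requiring several consecutive low-EAR frames keeps ordinary blinks from triggering an alert.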

Beauty Applications

Face analysis is starting to be used in the beauty industry. Face analysis algorithms are being developed to suggest how to enhance the beauty of a face through makeup, including specific applications and techniques, while taking into account the shape of the face, hair color, hairstyles, and other stylistic elements.

Like a human cosmetologist, a beauty-enhancing algorithm is trained to recognize the existing features of the face, enhance those features that humans consider desirable, and mask those that humans consider undesirable.

Smart Office

The smart office is a set of advanced technologies including intelligent communication and conferencing tools. These tools leverage face recognition, attention analytics, and gesture recognition models to support the hybrid work environment. The goal is to help teams communicate and collaborate more effectively.

Smart office face analysis algorithms must analyze the conference room and interactions between humans in the environment, and identify important human activities such as sitting, standing, speaking, and gesturing. In addition, some systems are able to recognize objects like whiteboards, post-it notes, and blackboards, and identify when humans are interacting with these objects.

Source: datagen.tech

Original Content:

https://datagen.tech/guides/face-recognition/face-analysis/