NVIDIA today unveiled powerful new GPU hardware to serve as the key building blocks for its vision to transform data centers into “AI factories” unleashing new frontiers in technical computing. That includes the new Hopper architecture and H100 GPUs.

By Rich Miller

Rows of NVIDIA DGX systems supported by NVIDIA Spectrum-4, a 400Gbps end-to-end networking platform. (Image: NVIDIA)

NVIDIA today unveiled powerful new hardware to serve as the key building blocks for its vision to transform data centers into “AI factories,” unleashing new frontiers in technical computing.

In the keynote of NVIDIA’s GTC 2022 conference, CEO Jensen Huang said AI applications are driving fundamental changes in data center design.

“AI data centers process mountains of continuous data to train and refine AI models,” Huang said. “Raw data comes in, is refined, and intelligence goes out — companies are manufacturing intelligence and operating giant AI factories.”

To power this transformation, NVIDIA unveiled its new Hopper GPU architecture and H100 GPU, along with new systems that will optimize the new hardware for massive computing tasks, such as creating digital twins of million-square-foot Amazon warehouses to make it easier to train the robotic systems that manage these facilities.

Huang is a key visionary in high performance computing, who sees technical computing as a world-changing undertaking. That came across in his GTC keynote, as Huang shared a vision of a future in which intelligence is created on an industrial scale and woven into real and virtual worlds.

That vision extends to climate change. Huang shared an update on NVIDIA’s plan to create Earth-2, a digital twin of the entire Earth that can be used to model climate solutions at unprecedented scale.

“Scientists predict that a supercomputer a billion times larger than today’s is needed to effectively simulate regional climate change,” Huang said. “NVIDIA is going to tackle this grand challenge with our Earth-2, the world’s first AI digital twin supercomputer, and invent new AI and computing technologies to give us a billion-X before it’s too late.”

Hopper Architecture Succeeds Ampere GPUs

It’s a big vision, but Huang and NVIDIA have a track record of major innovation in high performance computing (HPC). NVIDIA’s graphics processing unit (GPU) technology has been a key driver in the rise of specialized computing, enabling new workloads in supercomputing, artificial intelligence (AI) and connected cars.

The adoption of NVIDIA GPUs has also boosted the power density of racks inside data centers, which in many cases has required additional cooling to manage the heat within IT environments.

NVIDIA has been investing heavily in innovation in AI, which it sees as a pervasive technology trend that will bring its GPU technology into every area of the economy and society. The company’s AI platform is now used by 25,000 companies around the globe.

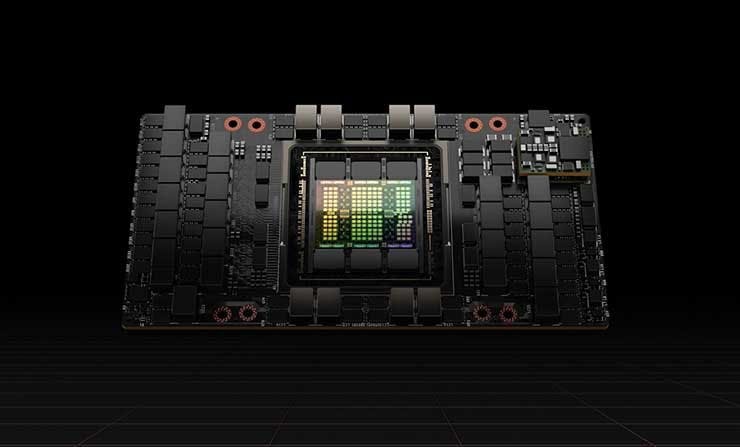

The NVIDIA H100, the first GPU using the new Hopper architecture. (Image: NVIDIA)


NVIDIA’s Hopper architecture, named for pioneering computer scientist Grace Hopper, brings new levels of computing power. Hopper succeeds the NVIDIA Ampere architecture, launched two years ago.

The first Hopper-based GPU is the H100, featuring more than 80 billion transistors. It was created using the 4-nanometer process technology from chip foundry TSMC. “Hopper H100 is the biggest generational leap ever — 9x at-scale training performance over A100 and 30x large-language-model inference throughput,” Huang said.

Hopper is optimized to accelerate workloads using Transformer, a deep learning model developed by Google that enables researchers to work with larger datasets in tasks like natural language processing and computer vision. The H100 includes a Transformer Engine that NVIDIA says can dramatically speed these projects without losing accuracy.

“The Transformer model is now the dominant building block for neural networks,” said Paresh Kharya, senior director of product management and marketing for accelerated computing at NVIDIA. “The computing required to train large Transformer models has been exploding.”

Cloud platforms planning to integrate the Hopper architecture into their offerings include Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud, along with Alibaba, Baidu and Tencent Cloud.

Leading systems makers planning servers with H100 accelerators include Cisco, Dell Technologies, Fujitsu, Hewlett Packard Enterprise, Inspur, Lenovo, Supermicro and a wide range of HPC-focused server vendors.

More Powerful DGX Systems Coming to Data Centers

The H100 will be integrated into the fourth-generation NVIDIA DGX, the “supercomputer in a box” designed for deployments in traditional data center environments, including colocation providers in NVIDIA’s DGX-Ready program. Each DGX system will feature eight H100 GPUs, providing 32 petaflops of AI performance, the company said.

The DGX H100 will be available in late 2022 and is expected to require up to 10.2 kilowatts (kW) of power in a 6-rack-unit system, a meaningful increase from the 6.5 kW of its predecessor, the DGX A100. A typical data center rack would be able to physically support up to five DGX units, but only if its environment can handle up to 50 kW of density.
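The density math behind those figures can be sketched in a few lines. This is a back-of-the-envelope illustration, not NVIDIA sizing guidance: it uses only the peak power numbers quoted above, assumes every system draws its peak simultaneously, and ignores networking gear and cooling overhead. The function names are hypothetical helpers introduced for this example.

```python
# Figures quoted in the article.
DGX_H100_PEAK_KW = 10.2
DGX_A100_PEAK_KW = 6.5
SYSTEMS_PER_RACK = 5  # the article's "up to five DGX units" per rack


def rack_peak_kw(system_peak_kw: float, systems: int = SYSTEMS_PER_RACK) -> float:
    """Peak IT load of one rack filled with identical systems."""
    return system_peak_kw * systems


def systems_within_budget(system_peak_kw: float, rack_budget_kw: float) -> int:
    """Whole systems that fit under a rack power budget.

    Hypothetical helper: considers power only, not rack space or cooling.
    """
    return int(rack_budget_kw // system_peak_kw)


if __name__ == "__main__":
    for name, kw in (("DGX A100", DGX_A100_PEAK_KW), ("DGX H100", DGX_H100_PEAK_KW)):
        print(f"{name}: {rack_peak_kw(kw):.1f} kW per rack of {SYSTEMS_PER_RACK}")
    increase = DGX_H100_PEAK_KW / DGX_A100_PEAK_KW - 1
    print(f"Per-system increase: {increase:.0%}")
```

Under these assumptions, five DGX H100 systems at peak come to about 51 kW, in line with the roughly 50 kW rack density cited, while five DGX A100 systems come to 32.5 kW.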

That’s why Huang’s vision of data centers as “AI factories” will require advanced cooling technologies, which could include optimized air-cooled containment systems, or a range of liquid cooling options.

In other announcements from GTC:

NVIDIA is developing Omniverse Cloud, a suite of cloud services that gives artists, creators, designers and developers access to the NVIDIA Omniverse platform for 3D design and simulation. NVIDIA did not share a launch date or indicate where the service’s cloud infrastructure would reside. The company runs its online operations through both in-house data centers and leased space at wholesale providers. Industry reports indicate NVIDIA leased about 14 megawatts of new data center capacity in 2021.

NVIDIA announced NVIDIA Spectrum-4, the next generation of its Ethernet platform. The 400Gbps end-to-end networking platform provides 4x higher switching throughput than previous generations, with 51.2 terabits per second, the company said.

Expanded configurations are in development for the NVIDIA Grace CPU, which was announced last year and is based on the energy-efficient Arm architecture found in billions of smartphones and edge computing devices. At GTC 2022, NVIDIA said Grace will be available as a “superchip” with two CPU chips connected over a low-latency chip-to-chip interconnect.

The company announced NVIDIA OVX, a computing system designed to power large-scale digital twin simulations that will run within NVIDIA Omniverse.
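As a rough sanity check on the Spectrum-4 figures quoted above, the aggregate throughput can be related to the 400Gbps port speed. This sketch assumes that switching throughput is simply the sum of full-rate ports and that every port runs at 400Gbps. Neither assumption is stated in the article, and actual port configurations may differ. The helper names are hypothetical.

```python
# Figures quoted in the article, expressed in Gbps to keep the arithmetic exact.
SPECTRUM4_SWITCHING_GBPS = 51_200  # 51.2 terabits per second
PORT_SPEED_GBPS = 400
GENERATION_SPEEDUP = 4  # "4x higher switching throughput"


def equivalent_ports(total_gbps: int, port_gbps: int) -> int:
    """Number of full-rate ports the aggregate throughput could feed."""
    return total_gbps // port_gbps


def implied_previous_gbps(total_gbps: int, speedup: int) -> int:
    """Aggregate throughput implied for the prior generation."""
    return total_gbps // speedup


if __name__ == "__main__":
    print(equivalent_ports(SPECTRUM4_SWITCHING_GBPS, PORT_SPEED_GBPS))
    print(implied_previous_gbps(SPECTRUM4_SWITCHING_GBPS, GENERATION_SPEEDUP))
```

Under those assumptions, 51.2 terabits per second corresponds to 128 ports at 400Gbps, and the 4x claim implies about 12.8 terabits per second for the generation it is compared against.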

With its focus on digital twins, NVIDIA is offering a distinct and specific take on metaverse technologies, which have been a hot topic since Facebook rebranded to Meta last fall. As analysts and users struggle with the value proposition of a virtual social network, NVIDIA’s Omniverse-powered digital twins come with a clearer case for how 3D worlds can translate into concrete gains in business and science.

NVIDIA says OVX will enable designers and engineers to build physically accurate digital twins of buildings. Companies can evaluate and test complex systems and processes, with multiple autonomous systems interacting to optimize factories and warehouses, or train robots and autonomous vehicles before deploying them in the physical world.

“Physically accurate digital twins are the future of how we design and build,” said Bob Pette, vice president of Professional Visualization at NVIDIA. “Digital twins will change how every industry and company plans. The OVX portfolio of systems will be able to power true, real-time, always-synchronous, industrial-scale digital twins across industries.”

In his keynote, Huang shared how Amazon uses Omniverse Enterprise to design and optimize its fulfillment operations.

“Modern fulfillment centers are evolving into technical marvels — facilities operated by humans and robots working together,” Huang said. “AI will revolutionize all industries.”

Source: datacenterfrontier

Original Content:

https://shorturl.at/jmnY5