By Romain Bouges

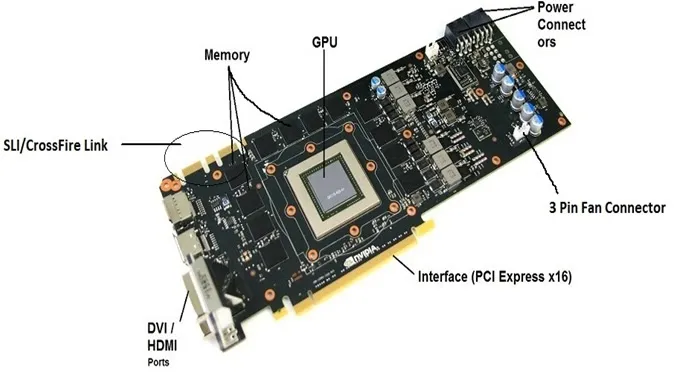

Typical GPU setup with Input, Output and memory modules.

Typical GPU setup with Input, Output and memory modules.In deep learning, hardware acceleration is the use of computer hardware designed to speed up artificial intelligence applications greater than what would be possible with a software running on a general-purpose central processing unit (CPU). Machine learning applications(1) such as artificial neural networks, machine vision and inference(2) are especially concerned.

We will review the most common type of material used in nowadays deep learning applications and open the discussion on promising technologies to come.

General purpose integrated circuit: Graphical Processing Unit (GPU)

Graphical Processing Units (GPUs) is a general-purpose solution that will be preferred to CPUs for several reasons.

GPUs allows to have thousands of threads in parallel (vs single thread performance optimization), hundreds of simpler cores (vs a few complex) and allocate most of the die surface for simple integer and floating point operations (vs more complex operations such as Instruction Level Parallelism). Numerous simpler operations will require less power to be carried out, which is another parameters to be taken into consideration.(3)

CPUs could be compared as a fast and fuel consuming sport car carrying a small load to a slow and fuel efficient freight truck with a large load.(4)

Specific purpose integrated circuits: Field-Programmable Gate Array (FPGA)

The advantage of this kind of specific purpose integrated circuit compared to a general-purpose one is its flexbility: after manufacturing it can be programmed to implement virtually any possible design and be more suited to the application on hand compared to a GPU.

This kind of hardware is also designed keeping in mind general purpose applications to be used for particular settings. (5)

It can be designed as a prototype to later design an Application-Specific Integrated Circuit.

Further improvements: dedicated Application-Specific Integrated Circuits (ASICs)

Those integrated circuit will be specially designed for an application without the possibility to reprogram it. It will usually increase its troughtput by a factor of 10 (1) (performance-per-watt improvement over off-the-shelf solutions when dealing with machine learning tasks) and/or will be more consumption efficient. The size Amazon, Google and Facebook among others are developping such circuits for their own use.(6)(7)

References

(1) Google boosts machine learning with its tensor processing unit.

https://techreport.com/news/30155/google-boosts-machine-learning-with-its-tensor-processing-unit/(2) Project Brainwave.

https://www.microsoft.com/en-us/research/project/project-brainwave/(3) Do we really need gpu for deep-learning ?

https://medium.com/@shachishah.ce/do-we-really-need-gpu-for-deep-learning-47042c02efe2/(4) GPUs necessary for deep learning.

https://www.analyticsvidhya.com/blog/2017/05/gpus-necessary-for-deep-learning/(5) A Survey of FPGA-based Accelerators for Convolutional Neural Networks.

https://www.academia.edu/37491583/A_Survey_of_FPGA-based_Accelerators_for_Convolutional_Neural_Networks(6) An Updated Survey of Efficient Hardware Architectures for Accelerating Deep Convolutional Neural Networks. Page 9 — Spatial Architectures: Fpgas and Asics.

https://www.mdpi.com/1999-5903/12/7/113/pdf(7) Facebook joins Amazon and Google in AI chip race.

https://www.ft.com/content/1c2aab18-3337-11e9-bd3a-8b2a211d90d5/Source: medium.com

Original Content:

https://shorturl.at/dESV3